Use case

Optimized MongoDB Synchronization with Jenkins and Python

- Vishalkumar Chaurasia

About the Customer

The customer is an innovative company that assesses the quality of fruits and vegetables. Its product performs a comprehensive analysis of fresh produce and provides detailed insights into its quality. Available on both Android and iOS, the product uses machine learning and AI-powered technology to evaluate fruit quality. The company aims to revolutionize the global fresh produce supply chain by strengthening quality assurance with robust assessments.

Customer Challenge

The customer initially relied on AWS Lambda functions written in TypeScript to handle its MongoDB data synchronization. However, using each Lambda as an isolated microservice introduced several challenges related to scalability and maintainability.

AWS Lambda's 15-minute maximum execution time became a major bottleneck when syncing large datasets. It forced the team to orchestrate multiple Lambdas to complete a single synchronization cycle.

No execution context persisted across these functions. This made debugging difficult and made failed tasks hard to retry safely, which often resulted in duplicated or lost data.

Overall, this architecture increased operational complexity and made it challenging to guarantee reliable, end-to-end data integrity.

Solution

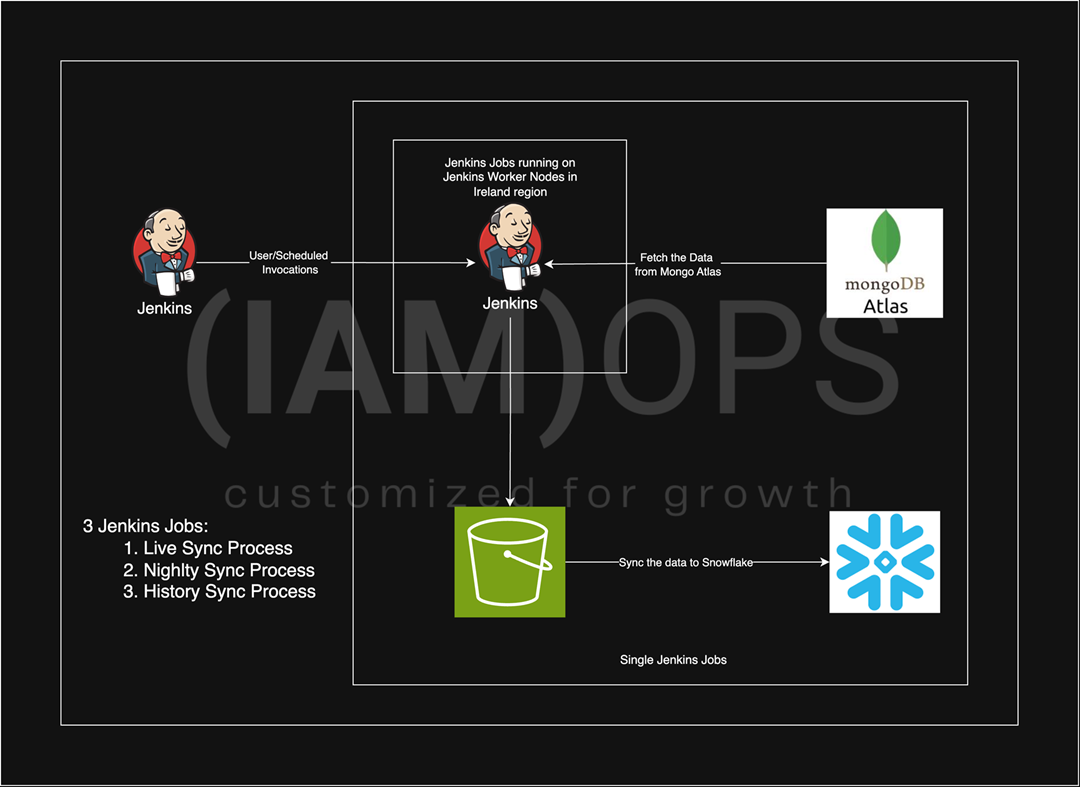

To overcome the limitations of the fragmented Lambda-based approach, IAMOPS implemented a centralized, robust solution built on Jenkins. The key steps were:

- Centralizing the Synchronization Workflow:

A single Jenkins job was developed to orchestrate the entire data synchronization process, moving data from MongoDB to AWS S3 and Snowflake. This unified pipeline replaced the multiple disparate Lambda microservices.

- Implementing Fault-Tolerant Execution:

The Jenkins job was made fault-tolerant by tracking its progress through the modified date field on MongoDB documents. If a run fails, the job resumes from the last successfully synced timestamp instead of restarting the entire sync. This prevents data duplication and makes recovery straightforward.

- Optimizing Incremental Synchronization:

By filtering on the modified date field in MongoDB documents, the solution syncs incrementally. Each run transfers only the documents changed since the last successful sync. This significantly reduces runtime and improves efficiency compared with full dataset syncs.

- Securing the Infrastructure:

The Jenkins environment was deployed with a master and multiple worker nodes in private AWS subnets, accessible only through a VPN. IAM roles follow the principle of least privilege to minimize risk. Sensitive data such as credentials and connection strings is stored securely in AWS SSM Parameter Store.

- Establishing a Safe Development Workflow:

A dedicated debug environment was set up so that pipeline changes and improvements can be tested safely without affecting production data or processes.

- Aligning with the AWS Well-Architected Framework:

The entire solution follows AWS best practices and addresses all six pillars of the framework: operational excellence, security, reliability, performance efficiency, cost optimization, and sustainability. Examples include parameterized, automated Jenkins jobs, environment segregation for security, cost-efficient t3.medium EC2 instances, and minimal cross-region data transfer.
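The incremental, resumable sync described above can be illustrated with a minimal Python sketch. This is not the customer's actual job. The field name `modifiedAt`, the JSON checkpoint file, and the functions `load_checkpoint`, `save_checkpoint`, `batched`, and `incremental_sync` are hypothetical. The MongoDB query and the S3/Snowflake writer are passed in as callables (`fetch_since`, `write_batch`), so the logic can run without either service. The checkpoint advances only after a batch has been written. The writer is assumed to upsert by `_id`, so documents re-read on resume overwrite earlier rows instead of duplicating them.

```python
import json
import os
from datetime import datetime, timezone
from typing import Callable, Iterable, Iterator, List

# Hypothetical name for the documents' "modified date" field.
MODIFIED_FIELD = "modifiedAt"
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def load_checkpoint(path: str) -> datetime:
    """Return the last successfully synced timestamp, or the epoch on the first run."""
    if not os.path.exists(path):
        return EPOCH
    with open(path) as f:
        return datetime.fromisoformat(json.load(f)["last_synced"])


def save_checkpoint(path: str, ts: datetime) -> None:
    """Persist the checkpoint atomically (write a temp file, then rename it)."""
    tmp = path + ".tmp"
    with open(tmp, "w") as f:
        json.dump({"last_synced": ts.isoformat()}, f)
    os.replace(tmp, path)


def batched(items: Iterable[dict], size: int) -> Iterator[List[dict]]:
    """Group an iterable (e.g. a database cursor) into lists of at most `size` items."""
    batch: List[dict] = []
    for item in items:
        batch.append(item)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch


def incremental_sync(
    fetch_since: Callable[[datetime], Iterable[dict]],
    write_batch: Callable[[List[dict]], None],
    checkpoint_path: str,
    batch_size: int = 500,
) -> int:
    """Sync documents modified at or after the checkpoint; return how many were written.

    fetch_since must yield documents sorted ascending by MODIFIED_FIELD. With pymongo
    this could be collection.find({MODIFIED_FIELD: {"$gte": since}}).sort(MODIFIED_FIELD, 1).
    write_batch must be idempotent (an upsert keyed by _id), because the ">=" query
    re-sends documents that share the checkpoint timestamp after a resume.
    """
    since = load_checkpoint(checkpoint_path)
    written = 0
    for batch in batched(fetch_since(since), batch_size):
        write_batch(batch)
        # Advance the checkpoint only after the batch is safely written, so a failure
        # in a later batch makes the next run resume from here rather than from zero.
        save_checkpoint(checkpoint_path, batch[-1][MODIFIED_FIELD])
        written += len(batch)
    return written
```

For example, suppose the second of three batches fails. The checkpoint still holds the timestamp of the last document in the first batch, so the next run (for instance, a Jenkins retry) starts there instead of rescanning the whole collection. Querying with `>=` rather than `>` may re-send a few documents, but it guarantees that no document sharing the checkpoint timestamp is skipped.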

This structured approach ensured a scalable, reliable, and secure synchronization process with improved observability and maintainability.
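The credential handling described under Securing the Infrastructure, combined with the separate debug environment, might look like the following Python sketch. The parameter paths (`/mongo-sync/prod/...`, `/mongo-sync/debug/...`), the key names, and the helper functions are hypothetical. `get_parameter(Name=..., WithDecryption=True)` is the boto3 SSM client call. The client is passed in so the code can be exercised without AWS credentials.

```python
from typing import Any, Dict

# Hypothetical parameter layout: one prefix per environment, so the debug
# environment reads its own connection strings and never production's.
ENVIRONMENTS = ("prod", "debug")
PARAM_NAMES = ("mongodb-uri", "snowflake-password")


def get_secure_parameter(ssm_client: Any, name: str) -> str:
    """Fetch one parameter, decrypting it if it is a SecureString."""
    response = ssm_client.get_parameter(Name=name, WithDecryption=True)
    return response["Parameter"]["Value"]


def load_sync_config(ssm_client: Any, env: str) -> Dict[str, str]:
    """Read every parameter the sync job needs for the given environment."""
    if env not in ENVIRONMENTS:
        raise ValueError(f"unknown environment: {env!r}")
    prefix = f"/mongo-sync/{env}"
    return {key: get_secure_parameter(ssm_client, f"{prefix}/{key}") for key in PARAM_NAMES}
```

In a Jenkins job, the client would come from `boto3.client("ssm")` and use the node's IAM role. Keeping one path prefix per environment lets a least-privilege policy grant `ssm:GetParameter` on just that prefix, plus `kms:Decrypt` if the SecureString parameters use a customer-managed KMS key. With that policy, the debug job cannot read production secrets.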

Results & Benefits

- Enhanced Reliability and Maintainability:

Centralizing the workflow in Jenkins drastically improved the reliability and maintainability of the synchronization process. Incremental updates based on the MongoDB modified date field reduced synchronization time by 40%, improving compute resource utilization and lowering execution costs.

- Increased Operational Efficiency:

Automating and centralizing the job significantly reduced the need for manual intervention, saving the operations team an estimated 20 work hours per week.

- Cost Optimization:

Using cost-effective t3.medium EC2 instances for the Jenkins worker nodes helped keep infrastructure expenses down. Keeping the S3 data and the Snowflake account in the same AWS region also minimized data transfer costs, further contributing to overall savings.

About IAMOPS

IAMOPS is a full-suite DevOps company that helps technology companies achieve intense production readiness.

Our mission is to make our clients’ infrastructure and CI/CD pipelines scalable while mitigating failure points, optimizing performance, ensuring uptime, and minimizing costs.

Our DevOps suite, which includes DevOps Core, NOC 24/7, FinOps, QA Automation, and DevSecOps, is designed to accelerate our clients’ growth.

As an AWS Advanced Tier Partner and Reseller, we focus on two key pillars. The first is Professionalism: adhering to best practices and using advanced technologies. The second is Customer Experience: responsiveness, availability, clear project management, and transparency that give our clients an exceptional experience.